Can Quantum Computing Make Encryption Useless?

Quantum computing is a rapidly growing research field which

combines the science of computing with quantum principles such as entanglement

and superposition. Startup companies

investing into quantum computing continue to pop up and Google and IBM invest

millions of dollars into the development of quantum computers. As interest

continues to grow, we should evaluate the direction in which they are headed

and discuss ways in which they could make our security systems unviable.

Figure 1: Interior of an IBM quantum computer. Shown

on flickr.com.

What is Quantum Computing?

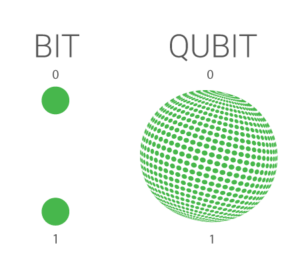

Classical computing uses bits to make computations, in

quantum computing, their counterpart is the qubit. A bit can be in one state at

any point in time, that being either 0 or 1. A qubit is a particle that is in a

state of quantum superposition and has probabilities of being in one of two

states, represented as 0 and 1. It’s important to keep these suspended in their

quantum state because, when observed, the qubit loses its quantum properties

and is statically either 0 or 1.

Figure 2: Visual representation of a bit and a

qubit. Shown on medium.com.

Quantum computing scales at 2n, meaning that for

every qubit that is added to a quantum computer, the number of potential states

scales exponentially. Classical computing scales linearly for every bit added. This

is the power of quantum computing, with the computation scaling exponentially,

quantum computers can compute things much faster with far less overhead. As far

as we know, quantum computers are not able to solve anything that a classical

computer could not, given enough time and resources. The problem with current

quantum computers is that currently the most powerful quantum processor

contains only 127 qubits which is not powerful enough harness the true

potential of quantum computing. This potential computational power has many

thinking about the potential negative and positive uses of this technology.

Many are worried about future quantum computer’s ability to break our current

encryption systems

History of Quantum Computing

Research into the field of quantum computing started as

early as in when Paul Benioff described the first quantum mechanical model of a

computer which laid the foundation for future research into quantum computing[1]. In 1985, Peter Shor discovered a quantum

algorithm that could factor large numbers very quickly[2]. This was a huge discovery because many

encryption systems rely on the hardness of factorization and the discrete log

problem to keep systems secure. Shor’s algorithm could theoretically solve

both with ease, breaking many of the cryptographic system’s that we have in

place. The importance of this discovery brought much more attention and

resources to the field of quantum computing. In fact, this was most likely

among the discoveries that spurred the NSA to create the Penetrating Hard

Targets project which aims to create a quantum computer to crack cryptographic

systems. Shor followed this up the next year with a paper detailing the

creation of quantum error codes[3]. Quantum computers are unreliable due to

the difficulty of sustaining quantum states for long periods of time. The discovery

of quantum error codes would allow for quantum error

correction. With the implementation of quantum error correction, using Shor’s

algorithm to break encryption would be physically viable.

Research and development of quantum computers increased at

a rapid pace since then. Notably, in 2016, Rainier Blatt and Isaac Chuang

realized the first efficient implementation of Shor’s algorithm on

a quantum computer in which they factored the number fifteen[4]. Although unable to factor large numbers

necessary to break encryption, this development

made the threat of Shor’s algorithm more real. Recently, in November 2021 IBM

unveiled their 127-qubit quantum processer “Eagle” which is the most powerful

quantum processor available today[5]. Despite the rapid advancement of quantum

computing in recent years, due to the overhead of implementing quantum error

correction, we are still a considerable way off from a computer capable of

breaking our security systems.

Quantum Decoherence

One of the largest roadblocks faced by quantum computing is

that of decoherence.

Decoherence within a quantum computer happens when a qubit is caused to flip

their quantum state either by external environmental factors or even quantum

logic gates themselves can cause decoherence within qubits.

The discovery of quantum error correction in 1995 was the

crucial discovery necessary to make quantum computers viable. Prior to their

discovery, the future for quantum computing seemed dim as the errors caused by

changes in quantum states seemed irreversible and uncontrollable. This study by

Peter Shor found that the particles decohered coherently which made it possible

to use the states of other qubits to check if others were decohered.[6]This in turn, would make it possible to

correct the qubits that were decohered and attain a correct result. This

largely fueled optimism into the field of quantum computing, as the ability for

controlling errors and reversing them in large-scale quantum computers would be

theoretically possible Although this was an important theoretical discovery,

the technology necessary to implement error codes is not quite there yet. As I

have said before the overhead cost of implementing such error codes is very

large. The most viable of the strategies for error correction are surface

codes.[7] To preserve the computations of qubits,

one must store all the information about the processing qubits in other qubits.

Researchers estimate that millions of qubits would be needed to provide

fault-tolerant computers that could simulate important physics problems or use

Shor’s algorithm for factoring. As such, large-scale quantum computers that are

fault-tolerant are still a distant future. Much research in the short term is

focused on mitigating decoherence as opposed to error correction. Strategies

include creating less noisy qubits[8] or utilizing neural networks to predict

errors.[9]

Quantum Computers and Encryption

Although the execution of algorithms that could crack our

current cryptographic systems is still far away, we know that a sufficiently

powerful quantum computer would break many of the systems we use. RSA

encryption, a popular type of public key encryption relies on the hardness of

factoring a large number for its encryption. With a classical computer it would

take a classical computer over two years to factor a 768-bit number[10]. Using Shor’s factorization algorithm, it

was calculated that on a large-scale fault-tolerant quantum computer it would

only take a bit more than one day to solve[11].

Shor’s algorithm can also be used to efficiently solve the discrete log

problem which is the basis of ECC cryptography. The other known algorithm able

to affect our cryptographic systems is Grover’s search algorithm which can be

used to find the roots of a function in half the time that a classical computer.

This would effectively half the time needed to crack some of our symmetric

encryption protocols, namely AES-128 and SHA-256.

Researchers have proposed multiple solutions to the problem

of post-quantum cryptography throughout the years. Fortunately for us,

public-key and symmetric encryption protocols do exist that stand up to

scrutiny based on Shor’s or Grover’s algorithms. Solutions that consider

Grover’s algorithm resolve the issues by doubling the security level, which has

low costs in symmetric encryption but significantly higher costs in public-key

encryption. For protecting against Shor’s algorithm, multiple proposals exist

such as lattice-based encryption[12], code-based encryption and hash-based

signatures. Lattice-based encryption seeks to address the weaknesses of RSA-encryption

by multiplying matrices instead of prime numbers. There is no known attack

capable of breaking this encryption. The size of encryption keys would have to

be increased in most encryption types to protect against quantum computers.

Other proposals such as code-based encryption and hash-based signatures also

have no known attack that can break them but suffer from efficiency problems as

the increased size of keys costs more overhead on these types of systems

Conclusion

Quantum computers have a ton of potential for the future

and the outlook for the future is bright. Although some skeptics believe that

fault-tolerant quantum computing will never be possible because of the large

amounts of decoherence that comes with a large system of qubits. Despite this, it is important to be aware of

the dangers and prepare for them as research progresses into the development of

quantum computers. It's important to switch over to quantum-proof systems

sooner rather than later as many cryptographic systems require all participants

to use the same cryptographic system. Standardizing and bulletproof testing

these systems in the near future is also important so that they can be widely

implemented throughout the world.

References

[1] P. Benioff,

“The computer as a physical system: A microscopic quantum mechanical

Hamiltonian model of computers as represented by Turing machines,” J. Stat.

Phys., vol. 22, no. 5, pp. 563–591, May 1980, doi: 10.1007/BF01011339.

[2] P. W. Shor, “Algorithms for quantum computation: discrete

logarithms and factoring,” in Proceedings 35th Annual Symposium on

Foundations of Computer Science, Nov. 1994, pp. 124–134. doi:

10.1109/SFCS.1994.365700.

[3] P. W. Shor, “Scheme for reducing decoherence in quantum

computer memory,” Phys. Rev. A, vol. 52, no. 4, pp. R2493–R2496, Oct. 1995,

doi: 10.1103/PhysRevA.52.R2493.

[4] T. Monz et al., “Realization of a scalable Shor

algorithm,” Science, vol. 351, no. 6277, pp. 1068–1070, Mar. 2016, doi:

10.1126/science.aad9480.

[5] P. Ball, “First quantum computer to pack 100 qubits enters

crowded race,” Nature, vol. 599, no. 7886, pp. 542–542, Nov. 2021, doi:

10.1038/d41586-021-03476-5.

[6] P. W. Shor, “Scheme for reducing decoherence in quantum

computer memory,” Phys. Rev. A, vol. 52, no. 4, pp. R2493–R2496, Oct.

1995, doi: 10.1103/PhysRevA.52.R2493.

[7] A. G. Fowler, M. Mariantoni, J. M. Martinis, and A. N.

Cleland, “Surface codes: Towards practical large-scale quantum computation,” Phys.

Rev. A, vol. 86, no. 3, p. 032324, Sep. 2012, doi:

10.1103/PhysRevA.86.032324.

[8] L. Kranz et al., “Exploiting a Single-Crystal

Environment to Minimize the Charge Noise on Qubits in Silicon,” Adv. Mater.,

vol. 32, no. 40, p. 2003361, 2020, doi: 10.1002/adma.202003361.

[9] C. Kim, K. D. Park, and J.-K. Rhee, “Quantum Error Mitigation

With Artificial Neural Network,” IEEE Access, vol. 8, pp. 188853–188860,

2020, doi: 10.1109/ACCESS.2020.3031607.

[10] T. Kleinjung et al., “Factorization of a 768-Bit RSA

Modulus,” in Advances in Cryptology – CRYPTO 2010, Berlin, Heidelberg,

2010, pp. 333–350. doi: 10.1007/978-3-642-14623-7_18.

[11] P. W. Shor, “Polynomial-Time Algorithms for Prime Factorization

and Discrete Logarithms on a Quantum Computer,” SIAM J. Comput., vol.

26, no. 5, pp. 1484–1509, Oct. 1997, doi: 10.1137/S0097539795293172.

[12] J. Hoffstein, J. Pipher, and J. H. Silverman, “NTRU: A

ring-based public key cryptosystem,” in Algorithmic Number Theory,

Berlin, Heidelberg, 1998, pp. 267–288. doi: 10.1007/BFb0054868.

No comments:

Post a Comment